RAG Integration

RAG stands for retrieval-augmented generation, and it does what the name says: it augments your prompt with relevant data from your custom data store. The relevant data is selected by a near search (a similarity search) executed against the connected vector store. Learn more about ingesting and using vector stores here.
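To make the idea concrete, here is a minimal sketch of a near search followed by prompt augmentation. It is not how Nter.ai implements the step internally. The tiny 3-dimensional vectors, the in-memory `store` list, and the function names `near_search` and `augment_prompt` are all assumptions made for illustration; a real setup would use embeddings from an embedding model and a vector store such as Pinecone.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def near_search(query_vector, store, top_k=2):
    """Return the texts of the top_k stored chunks most similar to the query vector."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vector, item["vector"]),
        reverse=True,
    )
    return [item["text"] for item in ranked[:top_k]]

def augment_prompt(question, context_chunks):
    """Prepend the retrieved chunks to the question as context."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Context:\n{context}\n\nQuestion: {question}"

# Hand-written toy embeddings (hypothetical values, for illustration only).
store = [
    {"text": "Northeast: strong demand for Product Category A", "vector": [0.9, 0.1, 0.0]},
    {"text": "Midwest: Product Category B is underperforming", "vector": [0.1, 0.9, 0.0]},
    {"text": "Office holiday schedule", "vector": [0.0, 0.0, 1.0]},
]
query_vector = [0.8, 0.3, 0.0]  # stands in for the embedded user question

chunks = near_search(query_vector, store, top_k=2)
final_prompt = augment_prompt("Where should we allocate resources?", chunks)
print(final_prompt)
```

The unrelated "Office holiday schedule" chunk is ranked last and therefore left out of the augmented prompt, which is the effect the near search is meant to have.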

Example

In a sales management system, a sales team embeds and ingests their quarterly sales data, including regions and product categories, into the vector database. The model retrieves historical sales data, market trends, and competitive landscape information to enhance its predictive capabilities. It then generates a comprehensive sales strategy report:

"Based on current and historical data, the Northeast region is showing a high demand for Product Category A, while the Midwest region is underperforming in Product Category B. To maximize revenue, consider allocating more resources to the Northeast for Category A and launching targeted promotions in the Midwest for Category B. Also, competitor X has recently reduced prices in the West; consider price-matching to maintain market share."

This report serves as actionable intelligence, aiding the sales team in making data-driven decisions for resource allocation and strategy.

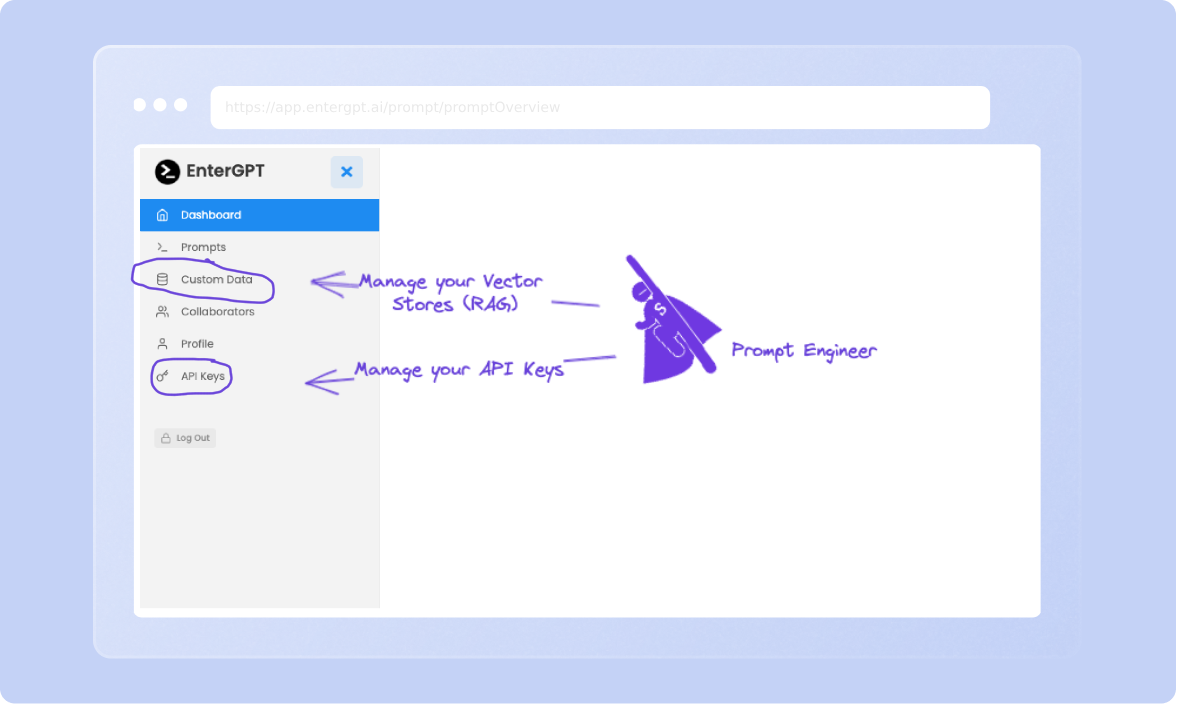

Manage Access Keys and Custom Vector Stores in EnterGPT

Connecting your Vector Store

In the menu section "Custom Data", press the button "+ Create Bucket". You are asked to name your bucket and give it a description.

You can choose an embedding model from OpenAI or Hugging Face. If you are on a free Nter.ai account, you must choose OpenAI, because free accounts are connected to a Pinecone index with OpenAI embeddings.

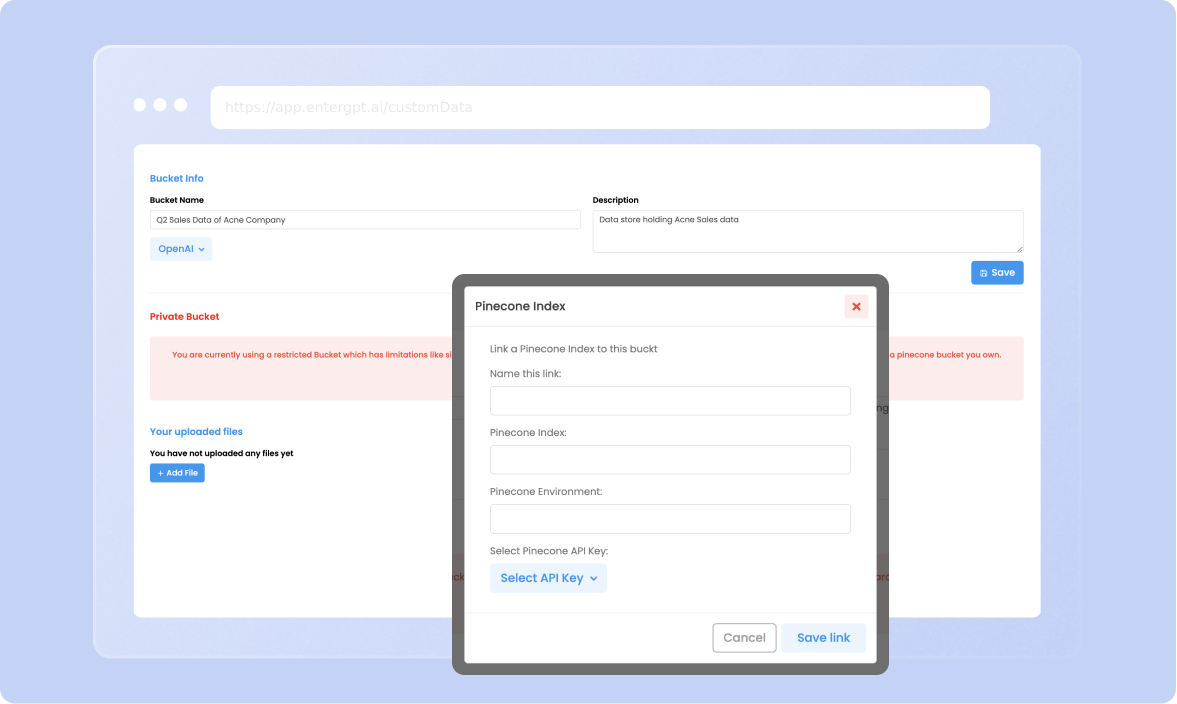

Press the button "+ Link Pinecone Index" to connect one of your own vector stores.

Important: The dimension of your Pinecone index must match the embedding model chosen in your EnterGPT bucket. For OpenAI, set the dimension to 1536 in your Pinecone index settings. If you selected the Hugging Face model (BERT), your Pinecone index dimension must be 768.
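If you script your setup, a small pre-flight check can catch a dimension mismatch before you link the index. The sketch below only encodes the two values stated above. The dictionary keys (`"openai"`, `"huggingface-bert"`) and the function name `check_index_dimension` are hypothetical labels, not identifiers used by Nter.ai or Pinecone, and the index dimension is assumed to be read from your Pinecone index settings.

```python
# Expected index dimensions as stated in this guide.
# The keys are illustrative labels, not official model identifiers.
EXPECTED_DIMENSIONS = {
    "openai": 1536,
    "huggingface-bert": 768,
}

def check_index_dimension(embedding_model, index_dimension):
    """Raise ValueError if the index dimension does not match the embedding model."""
    if embedding_model not in EXPECTED_DIMENSIONS:
        raise ValueError(f"Unknown embedding model: {embedding_model!r}")
    expected = EXPECTED_DIMENSIONS[embedding_model]
    if index_dimension != expected:
        raise ValueError(
            f"Index dimension {index_dimension} does not match "
            f"{embedding_model!r}, which needs {expected}."
        )
    return True

# Example: a bucket using OpenAI embeddings with a correctly sized index.
dimension_ok = check_index_dimension("openai", 1536)
```

Calling `check_index_dimension("openai", 768)` would raise a `ValueError`, which is the case the note above warns about.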

Note: You must already have an account and an index on the Pinecone page. Create a Pinecone account here.

When linking the index, you need to get the parameters from your Pinecone account. The link name is for your own reference. The Pinecone key must exist in your Nter.ai API Keys settings in the Pinecone section. You get the Pinecone API key in the Pinecone dashboard under API Keys.

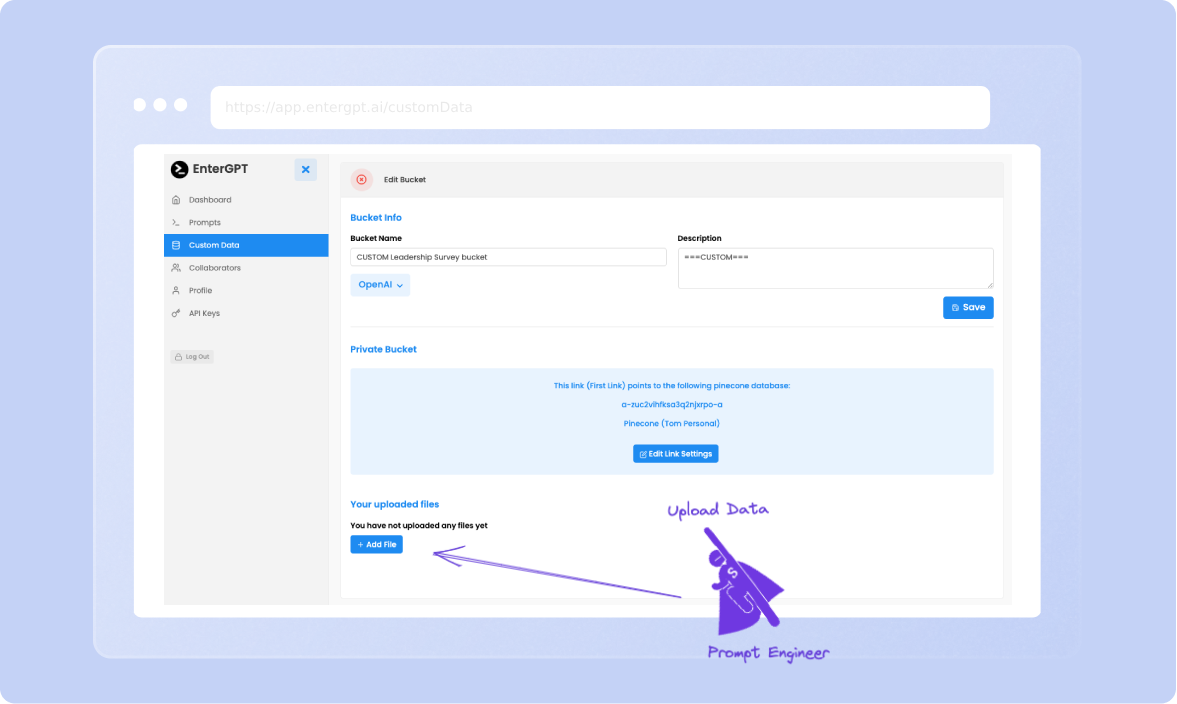

Ingesting your data into the Vector Store

To make your data available for the RAG process, the data needs to be embedded and stored in your vector store. Nter.ai does this for you automatically. To ingest data manually, upload a document (e.g. a PDF) directly to your custom data bucket.

If you want to add data to your vector store dynamically, you can also ingest it programmatically via our API. Include the ID of your bucket and the JSON data you want to store in the API payload. Find more information about ingesting data via our API here.
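As a rough illustration of such a payload, the sketch below combines a bucket ID and some JSON records into one request body. The field names `bucket_id` and `data`, the example bucket ID, and the helper `build_ingest_payload` are assumptions made for this example only; the actual field names, endpoint, and authentication are defined in the API documentation linked above, so the sketch deliberately stops before sending anything.

```python
import json

def build_ingest_payload(bucket_id, records):
    """Serialize a bucket ID and a list of JSON-compatible records into a request body.

    The field names "bucket_id" and "data" are hypothetical placeholders.
    """
    if not bucket_id:
        raise ValueError("A bucket ID is required.")
    if not records:
        raise ValueError("At least one record is required.")
    return json.dumps({"bucket_id": bucket_id, "data": records})

# Example records modeled on the sales scenario from this guide.
records = [
    {"region": "Northeast", "category": "A", "quarter": "Q1", "note": "high demand"},
    {"region": "Midwest", "category": "B", "quarter": "Q1", "note": "underperforming"},
]
payload = build_ingest_payload("my-bucket-id", records)  # hypothetical bucket ID
print(payload)
```

Building the body in one helper keeps the validation (a bucket ID and at least one record) separate from whichever HTTP client you use to send the request.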

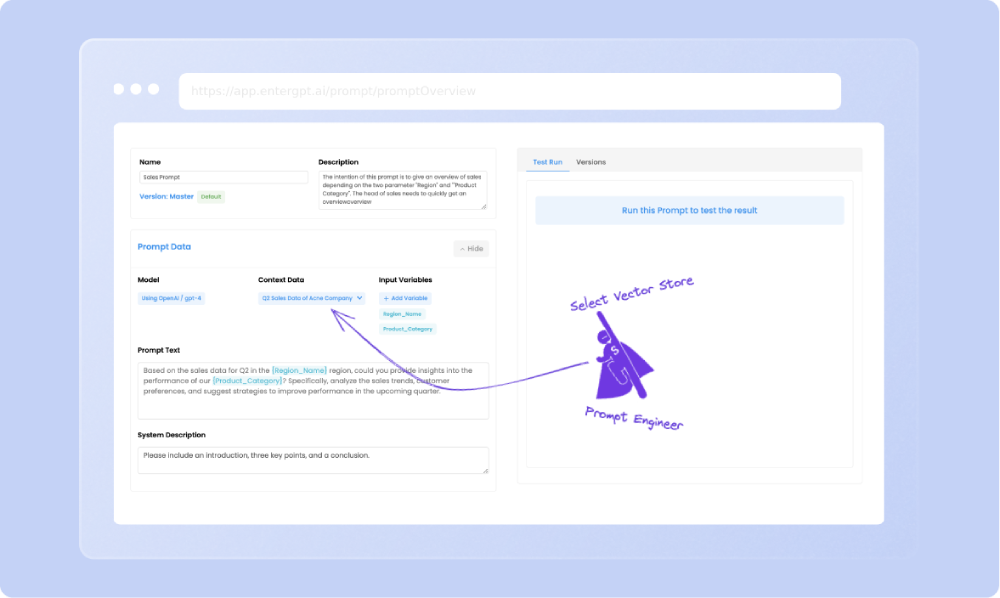

Adding Vector Search to your Nter.ai Chain

Nter.ai automatically chains the vector search into your prompt execution if you have added a vector store as a target to your prompts. So that several different prompts can use the same vector store, you define the vector stores separately in the menu section "Custom Data".

In the free version, you can define one vector store and upload a PDF to it to quickly start testing a prompt with an integrated RAG process.

You can always create custom vector stores, for example a Pinecone database, and link them to your vector store in Nter.ai. You also need to store your Pinecone API key so that Nter.ai can access your database during the RAG process. Find more information about API keys in this section.

Once you have stored your Pinecone API key and defined a datastore connection, you can use it in as many prompts as you like. Go to the prompt you want to connect to the data store, select the datastore in the dropdown, and that's it.

Execute the prompt and you will find that the LLM utilizes the specific knowledge from your custom data.
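The chaining described in this section can be pictured with the following sketch. It is an assumption about the general pattern, not Nter.ai's actual implementation: the `Prompt` class, the `run_prompt` function, the datastore name `sales-data`, and the stand-in retriever and LLM callables are all hypothetical. The point it illustrates is that the vector search runs only when a prompt has a datastore selected, and that one datastore can serve several prompts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class Prompt:
    name: str
    template: str                    # expects {context} and {question} placeholders
    datastore: Optional[str] = None  # name of the selected datastore, if any

def run_prompt(
    prompt: Prompt,
    question: str,
    retrievers: Dict[str, Callable[[str], List[str]]],
    llm: Callable[[str], str],
) -> str:
    """Run the vector search first if a datastore is selected, then call the LLM."""
    context = ""
    if prompt.datastore is not None:
        chunks = retrievers[prompt.datastore](question)
        context = "\n".join(chunks)
    return llm(prompt.template.format(context=context, question=question))

# Stand-ins for a real vector search and a real model call.
retrievers = {"sales-data": lambda q: ["Northeast: high demand for Category A"]}
echo_llm = lambda text: text  # returns the final prompt so the effect is visible

template = "Context: {context}\nQuestion: {question}"
with_rag = Prompt("strategy", template, datastore="sales-data")
summary = Prompt("summary", template, datastore="sales-data")  # same store, second prompt
without_rag = Prompt("plain", template)

answer_with_rag = run_prompt(with_rag, "Where is demand highest?", retrievers, echo_llm)
answer_summary = run_prompt(summary, "Summarize the quarter.", retrievers, echo_llm)
answer_plain = run_prompt(without_rag, "Where is demand highest?", retrievers, echo_llm)
print(answer_with_rag)
```

Here both `strategy` and `summary` reuse the same datastore, while `plain` skips retrieval entirely because no datastore is selected.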